Automated Web Scraper With Python & Windows Task Scheduler

Flipnode on May 05 2023

To automate a Python web scraping script, you need to run it periodically. You could handle the scheduling in Python itself, but Windows offers a more accessible option: Windows Task Scheduler, a built-in tool for running applications automatically. This article shows how to set up Task Scheduler to run a Python script on a schedule.

Guide to running a Windows Task Scheduler Python Script

To avoid common errors, prepare your web scraping script by following these guidelines before configuring the Task Scheduler.

Preparing a Python script

When preparing your Python script, follow these three essential tips:

- Employ a virtual environment to ensure that your Python web scraper runs smoothly by providing the correct Python version and all necessary libraries.

- Use absolute file paths to avoid any missing files that could break your script's functionality.

- Implement loggers to redirect output to a file, for more organized and manageable results.

Once you've imported the logging module, configuring logging can be done in just one line of code:

logging.basicConfig(filename=r"c:\scraper\output.log", level=logging.DEBUG)

Once configured, you can write informational messages to the log file like so:

logging.info("Insert your informational message here.")

This enables you to keep a record of important events and debug your script more effectively. You can read more about logging in this article.
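The one-line setup above can be extended slightly. The sketch below is one possible way to do it, assuming Python 3.9 or later (needed for the encoding argument of basicConfig); configure_logging is a hypothetical helper name, not part of the logging module. It adds a timestamp and level to every line, which makes it easier to tell scheduled runs apart in the log file.

```python
import logging
from pathlib import Path


def configure_logging(log_file: Path) -> None:
    """Send DEBUG and higher records to log_file, one timestamped line each."""
    # Create the log folder if it does not exist yet
    log_file.parent.mkdir(parents=True, exist_ok=True)
    logging.basicConfig(
        filename=str(log_file),
        level=logging.DEBUG,
        format="%(asctime)s %(levelname)s %(message)s",
        encoding="utf-8",  # Python 3.9+
        force=True,  # replace any handlers configured earlier (Python 3.8+)
    )
```

In the scraper, call configure_logging(Path(r"c:\scraper\output.log")) once at startup, before any other logging calls.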

Python script example

This article utilizes the following Python web scraping script:

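from bs4 import BeautifulSoup
import requests

url = 'https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html'

# A timeout stops a scheduled run from hanging if the site does not respond
response = requests.get(url, timeout=30)
response.raise_for_status()  # fail loudly on HTTP errors such as 404 or 500
response.encoding = "utf-8"

# The 'lxml' parser must be installed in the virtual environment (pip install lxml)
soup = BeautifulSoup(response.text, 'lxml')
price = soup.select_one('#content_inner .price_color').text

# Append to the CSV using an absolute path; utf-8 keeps the £ sign intact
with open(r'c:\scraper\data.csv', 'a', encoding='utf-8') as f:
    f.write(price + "\n")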

With each run of this script, the latest price is appended to a new line in the CSV file. This enables you to keep a record of pricing data for analysis and further manipulation.
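Because the script writes only the price, the CSV file does not record when each value was collected. A minimal sketch of one alternative, assuming you are free to change the output format, uses Python's csv module to write a timestamp next to each price; append_price and the prices.csv file name are hypothetical.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def append_price(csv_path: Path, price: str, when: Optional[datetime] = None) -> None:
    """Append a (timestamp, price) row, writing a header first if the file is new or empty."""
    if when is None:
        when = datetime.now(timezone.utc)
    is_new = not csv_path.exists() or csv_path.stat().st_size == 0
    # newline="" lets the csv module control line endings (avoids blank rows on Windows)
    with csv_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(["timestamp", "price"])
        writer.writerow([when.isoformat(timespec="seconds"), price])
```

In the script above, the with block could then be replaced by append_price(Path(r"c:\scraper\prices.csv"), price). Writing to a new file avoids mixing two-column rows with the existing one-column data.csv.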

How to create a batch file to run the Python script

Although it is possible to run a Python script without creating a batch (.bat) file, it is highly recommended to create one as it provides better control over running the scraper.

Here's an example of what a typical batch file might look like:

cmd /k "cd /d C:\scraper & venv\Scripts\python.exe price.py"

By utilizing a batch file, you can easily execute the script with a single click, specify command-line arguments, set environment variables, and more. This improves your workflow and makes it easier to manage your Python scripts.

Note that this command has two parts: "cmd" itself, and a quoted string containing multiple commands separated by an ampersand (&), which runs them one after another.

Here's a brief breakdown of the command:

- "cmd /k" starts a new command interpreter, runs the quoted commands, and then keeps the command window open. To have the window close (and the task end) when the commands finish, use "cmd /c" instead.

- "cd /d C:\scraper" changes the current directory to the folder containing the script and its virtual environment; the /d switch lets it change the drive as well.

- "venv\Scripts\python.exe price.py" runs the Python web scraper.

By changing the directory to the correct location, you can use relative file paths instead of specifying the full path of the Python executable and script file. Skipping this step would require you to use the full path for each command, which can be time-consuming and error-prone.
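As noted above, a batch file can also pass command-line arguments and set environment variables. A sketch of how the scraper could read them with argparse follows; the --output and --url options, the SCRAPER_URL variable, and parse_args are hypothetical names chosen for illustration.

```python
import argparse
import os
from pathlib import Path

# Hypothetical default; the batch file can override it
DEFAULT_URL = "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html"


def parse_args(argv=None) -> argparse.Namespace:
    """Read settings from the command line, falling back to an environment variable."""
    parser = argparse.ArgumentParser(description="Append the current price to a CSV file.")
    parser.add_argument(
        "--output",
        type=Path,
        default=Path(r"c:\scraper\data.csv"),
        help="absolute path of the CSV file to append to",
    )
    parser.add_argument(
        "--url",
        # SCRAPER_URL is a hypothetical variable the batch file could set
        default=os.environ.get("SCRAPER_URL", DEFAULT_URL),
        help="product page to scrape",
    )
    return parser.parse_args(argv)
```

The batch file could then run, for example, venv\Scripts\python.exe price.py --output C:\scraper\data.csv, or set SCRAPER_URL with the set command before starting Python.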

How to set up Windows Task Scheduler to run a Python script

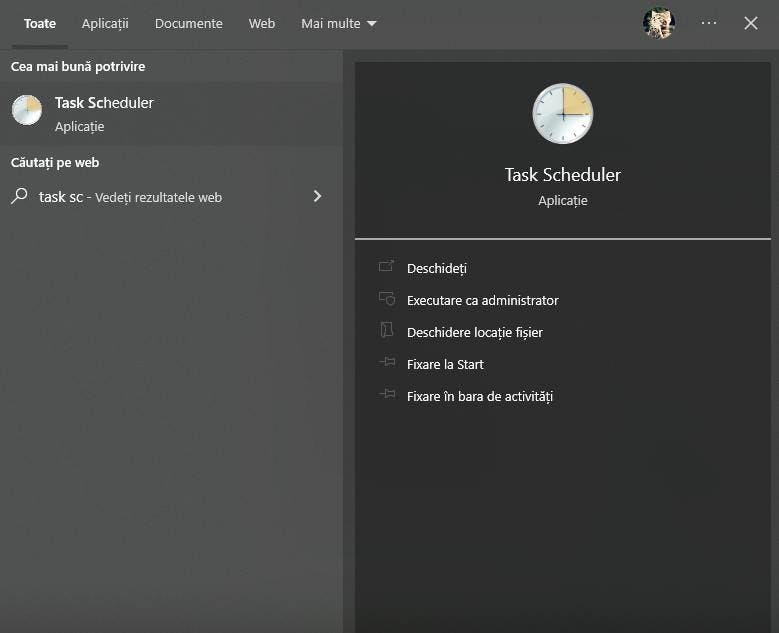

After you've created the batch file, you can locate the Task Scheduler by performing a search in Windows.

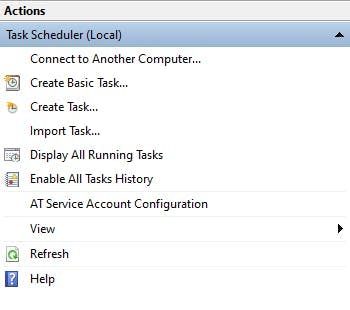

Select "Create Task" from the right panel of the Task Scheduler.

It's worth noting that you should avoid selecting "Create Basic Task" due to its limited options.

Instead, open the "Create Task" window, which offers settings across five tabs: General, Triggers, Actions, Conditions, and Settings.

The General tab contains two important settings to check. Firstly, you need to give your task a name that helps you remember its purpose.

The "Run whether user is logged on or not" setting is also crucial. Enabling this option ensures that your web scraper will run even when you are not logged in. Please note that selecting this option will require you to enter your password at the end of the task creation process.

Next, navigate to the Triggers tab and click on the "New" button to open the "New Trigger" window.

For instance, to run the web scraper every hour, select "Daily" under Settings. Then, under Advanced settings, check "Repeat task every", set it to 1 hour, and set "for a duration of" to 1 day (or Indefinitely).

To keep a stuck run (for example, one waiting on a request that never returns) from blocking later runs, also check "Stop task if it runs longer than" and set an appropriate limit.

Ensure that "Enabled" is selected and click "OK" to save the trigger.

After that, open the "Actions" tab and click "New" to open the "New Action" window.

In this window, select "Start a program" as the action and provide the full path of the batch script.

As mentioned earlier, the batch file has only one line:

cmd /k "cd /d C:\scraper & venv\Scripts\python.exe price.py"

Note: If there are spaces in your path, enclose the entire path in double quotes.

If you prefer, you can run the Python script directly without using a batch file.

In the Program/script field, enter the complete path of the python.exe file. In the Add arguments textbox, specify the complete path of the Python script. Once again, if any of these paths have spaces, enclose them in double quotes.

Finally, if your code uses relative paths, set the "Start in (optional)" field to the folder where your Python script is located.

You can skip the Conditions tab and go directly to the Settings tab. The recommended settings are highlighted below.

The settings provided are optional and straightforward. You can choose to accept the default settings and proceed by clicking OK.

After you click OK, Windows asks for your credentials if you selected "Run whether user is logged on or not". Enter your username and password, and click OK to save the task. The web scraper will run at the next scheduled time.
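After the task runs, the Last Run Result column in Task Scheduler shows the exit code of the program it started, where 0x0 means success. A minimal sketch, assuming the scraping code is wrapped in a function (run and scrape are hypothetical names), turns any unhandled error into exit code 1 so failures show up there. This is most reliable when the action starts python.exe directly; with cmd /k, the command window stays open and the task never finishes.

```python
import logging
from typing import Callable


def run(job: Callable[[], None]) -> int:
    """Run job() and translate the outcome into a process exit code."""
    try:
        job()
    except Exception:
        # Record the full traceback in the log before reporting failure
        logging.exception("Scheduled run failed")
        return 1
    return 0
```

At the bottom of the script, after importing sys, call sys.exit(run(scrape)).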

Common reasons why a Python script isn't running in Windows Task Scheduler

The most common issue is that Task Scheduler cannot find the python.exe file. To get the complete path of the Python executable, run the following command in Command Prompt:

where python

After running the "where python" command in Command Prompt, you'll see one or more lines displaying the full path of the Python executable, like so:

C:\scraper>where python
C:\Program Files\Python310\python.exe
C:\Users\codeRECODE\AppData\Local\Microsoft\WindowsApps\python.exe

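Make a note of the path to the Python executable you want to use. If you're not using a virtual environment, you'll need to provide this full path to python.exe; if you are, use the python.exe inside the environment's Scripts folder (for example, C:\scraper\venv\Scripts\python.exe). The WindowsApps entry is a Microsoft Store app execution alias rather than a regular installation, so the Program Files path is usually the safer choice.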
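To confirm which interpreter a given command actually uses, including whether it belongs to a virtual environment, you could run a small check script with exactly the executable path you plan to give Task Scheduler. The sketch below is a hypothetical helper (check_python.py is an assumed file name); it relies on sys.prefix differing from sys.base_prefix inside a virtual environment created with venv.

```python
import sys


def interpreter_info() -> dict:
    """Describe the Python interpreter that is running this code."""
    return {
        "executable": sys.executable,
        # Inside a venv, sys.prefix points at the venv and base_prefix at the base install
        "in_virtualenv": sys.prefix != sys.base_prefix,
    }


if __name__ == "__main__":
    info = interpreter_info()
    print(info["executable"])
    print("virtual environment" if info["in_virtualenv"] else "system interpreter")
```

For example, run C:\scraper\venv\Scripts\python.exe check_python.py and compare the printed path with the one in your task's action.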

It's important to use absolute paths when working with Task Scheduler: unless you set the Start in field, a scheduled task typically starts in C:\Windows\System32 rather than in your script's folder, so relative paths may not resolve as expected. Also enclose paths in double quotes, especially if they contain spaces or special characters. This helps avoid problems when executing the script.
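Inside the script, one way to stay independent of the working directory is to build absolute paths from the script's own location. A minimal sketch follows; path_next_to is a hypothetical helper, and it assumes the data file lives in the same folder as the script.

```python
from pathlib import Path


def path_next_to(script_file: str, name: str) -> Path:
    """Return an absolute path for `name` in the same folder as `script_file`.

    Pass __file__ as script_file so the result does not depend on the
    working directory Task Scheduler starts the script in.
    """
    return Path(script_file).resolve().parent / name
```

In price.py, path_next_to(__file__, "data.csv") would give C:\scraper\data.csv when the script is stored in C:\scraper, whatever folder the task starts in.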

Other web scraping automation alternatives

If you're using macOS or Linux, Cron is the primary alternative to Windows Task Scheduler. Although Cron has no graphical user interface, it can do nearly everything Task Scheduler does. To use it, run crontab -e in the terminal and add a line that specifies the schedule and the command to run. For a detailed guide on using Cron, check out our blog post on the topic.

In addition to Cron, there are other Linux-specific tools available on various Linux distributions, such as systemd (pronounced system-d) and Anacron.

Conclusion

Automating Python web scraping scripts with Windows Task Scheduler is a convenient alternative to an in-Python task scheduler, since the tool is already built into Windows. It lets you run tasks at predefined intervals, even when you are not logged in, and if you enable "Wake the computer to run this task" on the Conditions tab, it can wake a sleeping computer to run the scraper.

However, if you are using macOS or Linux, the Cron tool provides a similar automation solution, albeit without a user interface.